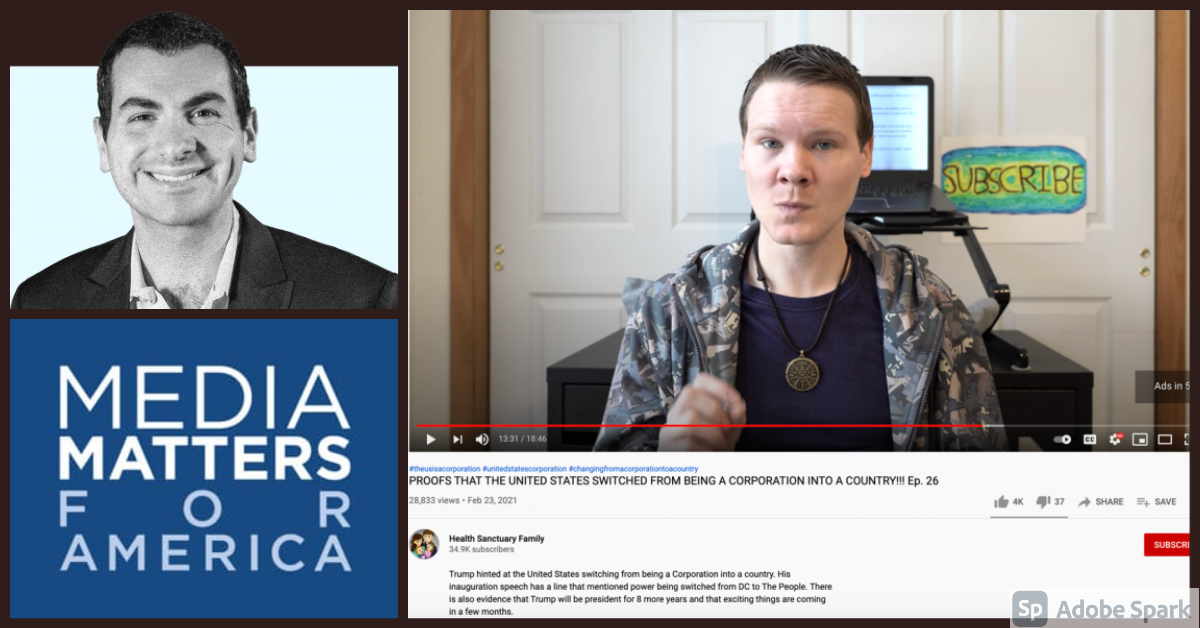

How YouTube continues to allow QAnon conspiracies to live on its platform… and make money from them

Media Matters has continued to call out the massive video-sharing platform for videos that still peddle dangerously false information.

Alex Kaplan, a senior researcher at Media Matters, a progressive media watchdog, has uncovered something troubling. Using BuzzSumo, a content-tracking tool, Kaplan found videos pushing a QAnon conspiracy theory that had racked up well over 300,000 combined views.

Where were these videos being published? The beloved and widely used self-broadcasting platform, YouTube.

Whether it is aware of it or not, the platform has allowed these videos to stay up and earn not only views but also advertising revenue, both for their creators and for YouTube itself.

What is QAnon? How could people possibly become entangled in such far-fetched theories? How were these videos able to exist on YouTube largely unchallenged? What dangers come with the spread of these conspiracy theories?

AL DÍA recently spoke with Kaplan to answer some of these questions. Before diving into his research, Kaplan described what QAnon is, where it originated and why it is so dangerous.

“QAnon is this far right extremist conspiracy theory that started on an anonymous message board in 2017 that basically claimed that there was this government insider that called itself ‘Q.’ Q claimed to have some inside scoop that Trump was involved with some secret plot alleging that he was going to target the deep state and take them down,” Kaplan explained.

Over the last few years, the community of QAnon believers and the influence that “Q” has over these believers has grown significantly.

“We started seeing it at Trump rallies, we started seeing multiple violent incidents tied to QAnon murders. And we saw what happened on Jan. 6,” Kaplan said.

For most Americans who were shocked and distressed by the events of Jan. 6, it is now clear that the QAnon community, as mysterious as it may appear, poses a serious threat.

But how did these theorists end up drawing hundreds of thousands of views to monetized videos full of alarming misinformation?

There has been some coverage of people who were once completely consumed by QAnon but managed to pull themselves out and take back control of their minds. Kaplan was hesitant to call QAnon a cult outright, but did say it had “cult-like tendencies.”

A heartbreaking piece from @AlbertSamaha about what it’s like to have someone you love be in thrall to the Qanon fantasy world https://t.co/Zv07EfLTjl pic.twitter.com/oNKhDl7WMD

— Adam Serwer (@AdamSerwer) March 12, 2021

But what draws vulnerable people into this far-right online community that behaves in a cult-like fashion? When people find themselves in cults, or simply in cult-like communities, the experience usually seems harmless at first, until it isn't.

It’s akin to the proverbial frog being slowly boiled alive: a frog dropped suddenly into boiling water will jump out, but a frog placed in tepid water that is gradually brought to a boil will not perceive the danger.

Communities like QAnon often attract people who are emotionally vulnerable, or lonely and in need of collective support. On top of that, 2020 was a chaotic and stressful year that left many people at their wit’s end.

The first few months of the pandemic were scary; people were stuck in their homes, trying to figure out what was going on, and trying to find answers.

Kaplan mentioned a popular subreddit called “QAnon Casualties,” where family members share their stories of “losing” loved ones to this community of conspiracy theorists.

According to Kaplan, the subreddit has grown rapidly.

“I believe in the past year, there’s over a thousand people there now. And you’ll see stories. I mean, there’s other reporting besides the subreddit on these stories. Like, ‘my mom, my dad, my brother, or my sister got sucked into this and just went into this rabbit hole and is not the same person anymore,’” Kaplan said.

Through his research, Kaplan found about 13 separate monetized videos on YouTube pushing various QAnon-derived conspiracy theories. Kaplan can’t say whether he found all of them, but these were the ones with significant view counts.

One video, with more than 180,000 views, has an all-caps clickbait title, claiming to have evidence that former President Donald Trump will be re-inaugurated on March 4, 2021, and that he controls the military.

On March 4, 2021, Donald Trump will not be inaugurated. When that day comes and goes QAnons, please abandon this conspiracy fantasyland and return to us. Your families and friends miss you. They’re worried about you. They want you back.

— PatriotTakes (@patriottakes) February 25, 2021

The video description says it offers “perfect proof that Donald Trump passed a law that gives him power to control the military until March 20, and that he will return to his position on March 4.” The video also runs ads, meaning that both YouTube and the creator made money from the conspiracy theory it peddles.

Another video from the same account is also monetized and describes in detail how the United States has “switch[ed] from being a corporation into a country,” and it mentions the March 4 theory as well.

Is YouTube responsible for letting these videos run unchecked? It’s not that simple.

YouTube is a gigantic, international platform, and Kaplan said it’s very possible that the platform was not even aware of these videos.

In October 2020, YouTube announced that it was expanding its hate and harassment policies to prohibit content that targets an individual or group with conspiracy theories, like QAnon, that have been used to justify real-world violence.

It also said that its earlier efforts to refine its policies had cut the reach of QAnon-like content through its search and discovery systems by 80%.

So it appears the platform did not deliberately allow these videos to run; in fact, they directly violate YouTube’s updated policies.

As for preventive measures, Kaplan emphasized that this is not users’ problem; it is YouTube’s problem. But he acknowledged that YouTube’s sheer size can make it nearly impossible to monitor and police every single video that is uploaded.

“There’s not a ton that individual users can do about a problem that YouTube should be dealing with by themselves. It’s often researchers and reporters doing this job for them, essentially by pointing out, ‘hey, you know, you have this bad conspiracy theory spreading on your platform,’” Kaplan explained.

Dangerous conspiracy theories spreading like wildfire on social media remain an unsolved problem, and experts are still puzzling over how to tackle it. For now, users have to keep reporting these videos, tweets and comments, and reach out to those who may need help grounding themselves back in reality.
